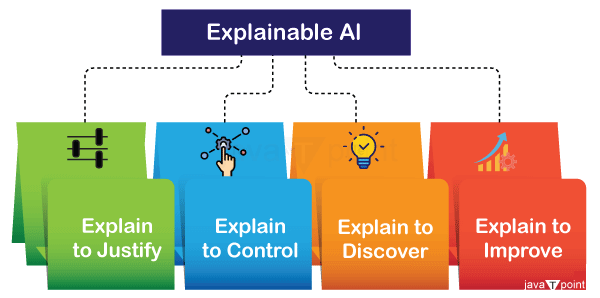

Explainable AI

Introduction

Explainable artificial intelligence (XAI) is a set of processes and methods that allows human users to understand and trust the results and output produced by AI algorithms. Explainable AI is used to describe an AI model, its expected impact, and its potential biases. It helps characterise model accuracy, fairness, transparency, and outcomes in AI-powered decision making. Explainable AI is critical for an organisation that wants to build trust and confidence when putting AI models into production. AI explainability also helps an organisation take an accountable approach to AI development.

As artificial intelligence becomes more advanced, humans are challenged to understand and retrace how an algorithm arrived at a result. The entire computation turns into what is commonly called a "black box" that is difficult to interpret. These black box models are created directly from the data. Moreover, not even the engineers or data scientists who build the algorithm can fully understand or explain what is happening inside it, or how it arrived at a specific result.

There are many benefits to understanding how an AI-enabled system has arrived at a particular output. Explainability can help developers ensure that the system is working as expected, it may be necessary to meet regulatory standards, or it may be important in allowing those affected by a decision to challenge or change that outcome.

Why Explainable AI Matters

It is crucial for an organisation to have a full understanding of its AI decision-making processes, supported by model monitoring and accountability, and not to trust them blindly.
Explainable AI can help people understand and explain machine learning (ML) algorithms, deep learning, and neural networks. ML models are often described as black boxes that cannot be interpreted, and the neural networks used in deep learning are among the hardest for humans to understand. Bias, often related to race, gender, age, or location, has long been a risk when training AI models. Furthermore, AI model performance can drift or degrade because production data differs from training data. This makes it critical for an organisation to continuously monitor and manage its models in order to promote AI explainability while also measuring the business impact of using such algorithms.

Explainable AI also promotes end-user trust, model auditability, and productive use of AI. It reduces the compliance, legal, security, and reputational risks of running AI in production. Explainable AI is a key requirement for adopting responsible AI, an approach to large-scale AI deployment in real-world organisations that prioritises fairness, model explainability, and accountability. To promote responsible AI adoption, organisations must embed ethical principles into AI applications and processes by building AI systems based on trust and transparency.

How Explainable AI Works

With explainable AI and interpretable machine learning, organisations can gain insight into the underlying decision-making process of the AI technology and make adjustments accordingly. Explainable AI can improve user satisfaction by increasing the end user's trust that the AI is making good decisions. When do AI systems give enough confidence in a decision that you can rely on it, and how can an AI system correct errors when they arise? As AI advances, ML processes must still be understood and controlled to ensure that AI model results are accurate.
Let's look at the differences between AI and XAI, the methods and techniques used to turn AI into XAI, and the distinction between interpreting and explaining AI processes.

Comparing AI with XAI

What is the distinction between "regular" AI and explainable AI? XAI employs specific techniques and methods to ensure that every decision made during the ML process can be traced and explained. Regular AI, on the other hand, often arrives at a result using an ML algorithm whose designers do not fully understand how it reached that conclusion. This makes it difficult to check for accuracy and leads to a loss of control, accountability, and auditability (the ability to review how a result was produced).

Explainable AI Methods

Explainable AI (XAI) techniques are organised around three main methods: prediction accuracy, accountability, and decision understanding. Prediction accuracy and accountability address technological requirements, whereas decision understanding addresses human needs. Explainable AI, particularly explainable machine learning, will be critical if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of highly intelligent machine partners.
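
The text above does not name a specific technique, so the following is only an illustrative sketch of one common model-agnostic approach, permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The `black_box` function, the two features (income and age), and the threshold of 50 are hypothetical stand-ins for a real trained model and dataset.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Return (baseline accuracy, mean accuracy drop per feature).

    A large drop means the model relies heavily on that feature;
    a drop of zero means shuffling the feature changed nothing.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only this column, leaving the other features intact.
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            permuted = [
                row[:col] + [value] + row[col + 1:]
                for row, value in zip(rows, shuffled)
            ]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return baseline, importances


def black_box(row):
    """Hypothetical opaque model: approves when income (feature 0) > 50.

    It ignores age (feature 1) entirely, which the explanation should reveal.
    """
    return 1 if row[0] > 50 else 0


# Synthetic data (an assumption for illustration): [income, age] pairs.
data_rng = random.Random(42)
rows = [[data_rng.uniform(0, 100), data_rng.uniform(18, 80)] for _ in range(200)]
labels = [black_box(row) for row in rows]

baseline, importances = permutation_importance(black_box, rows, labels)
for name, score in zip(["income", "age"], importances):
    print(f"{name}: mean accuracy drop = {score:.3f}")
```

In this toy setup the income feature shows a clear accuracy drop while age shows none, which is the kind of evidence a reviewer could use to question or confirm what a model depends on. For real models, library implementations such as scikit-learn's `sklearn.inspection.permutation_importance` are usually a better starting point than hand-written code.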

Explainability versus interpretability in AI

In artificial intelligence, explainability is the ability to clarify a model's decision-making process. Interpretability is broader and includes understanding the internal workings of the model. Explainability concentrates on results, whereas interpretability examines the structure and operations of the model to give a deeper understanding of how it works. Interpretability is the degree to which an observer can understand the cause of a decision. It is the success rate with which humans can predict the result of an AI output, while explainability goes a step further and looks at how the AI arrived at that result.

How does explainable AI relate to responsible AI?

Explainable AI and responsible AI have similar goals but take different approaches. The main differences between responsible AI and explainable AI are as follows:

Benefits of explainable AI

Five considerations for explainable AI

Conclusion

In conclusion, prioritising explainable AI promotes openness and trust by explaining the decisions that AI models make. Achieving complete interpretability remains a difficult task, though. To deploy AI ethically and practically, a balance between model performance and transparency is essential. This balance supports accountability and helps AI technologies gain broader acceptance in society.