Difference between AlexNet and GoogleNetIn recent years, deep learning has altered the field of computer vision, enabling computers to perceive and figure out visual information at uncommon levels. Convolutional Neural Networks (CNNs) play had a crucial impact on this change, with a few groundbreaking designs leading the way. Two of the most influential CNN structures are AlexNet and GoogleNet (InceptionNet). The two models have altogether added to the progression of image classification tasks, yet they contrast in their structures and design principles. In this article, we will dive into the critical differences between AlexNet and GoogleNet, exploring their structures, design decisions, and execution. Major Differences between AlexNet and GoogleNet

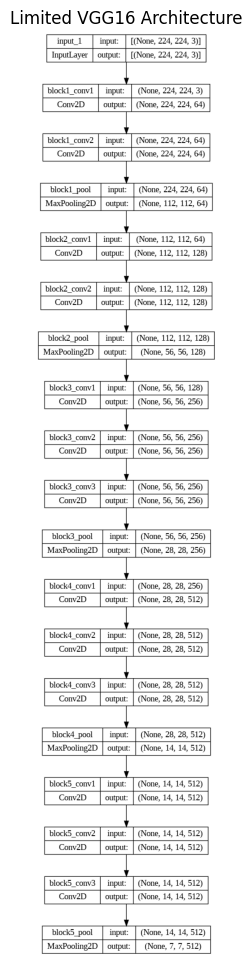

What is AlexNet?AlexNet is a noteworthy convolutional neural network (CNN) architecture created by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton. It was introduced in 2012 and made critical progress in the ImageNet Large Scope Visual Recognition Challenge (ILSVRC) by essentially beating different methodologies. AlexNet was the principal CNN to show the viability of deep learning for image order tasks, denoting a defining moment in the field of computer vision. 1. Architecture Released in 2012, AlexNet was a spearheading CNN that won the ImageNet Large Scope Visual Recognition Challenge (ILSVRC) with critical room for error. It comprises five convolutional layers followed by three completely associated layers. The utilization of ReLU (Redressed Direct Unit) actuation and neighborhood reaction standardization (LRN) added to its prosperity. AlexNet additionally presented the idea of involving GPUs in preparing, which sped up the growing experience altogether. 2. Network Profundity: With eight layers (five convolutional and three completely associated layers), AlexNet was viewed as deep at the hour of its presentation. Notwithstanding, contrasted with current designs, it is generally shallow, restricting its capacity to catch mind-boggling elements and examples in extremely complex datasets. 3. Computational Productivity: While AlexNet's presentation of GPU preparation sped up the educational experience, it was still computationally costly because of its deeper completely associated layers and restricted utilization of parallelization. 4. Overfitting: Because of its moderately shallow design and a huge number of boundaries, AlexNet was more inclined to overfitting, particularly on more modest datasets. Strategies like dropout were subsequently acquainted to moderate this issue.  5. Training: To train AlexNet, the creators utilized the ImageNet dataset, which contains more than 1,000,000 named images from 1,000 classifications. They utilized stochastic angle drop (SGD) with energy as the improvement calculation. During training, information expansion methods like arbitrary editing and flipping were applied to expand the size of the training dataset and further develop generalization. The training system was computationally requested, and AlexNet's utilization of GPUs for equal handling ended up being essential. Training AlexNet on a double GPU framework required about seven days, which was a critical improvement contrasted with customary computer processor-based training times. 6. Results: In the ImageNet 2012 rivalry, AlexNet accomplished a noteworthy top-5 mistake pace of around 15.3%, beating different methodologies overwhelmingly. The outcome of AlexNet started a flood of interest in deep learning and CNNs, prompting a change in the computer vision local area's concentration toward additional complicated and deeper neural networks. 7. Convolutional Layer Setup: The convolutional layers in AlexNet are organized in a basic succession, with periodic max-pooling layers for downsampling. This clear engineering was momentous at that point, yet it restricted the organization's capacity to catch complex progressive elements. 8. Dimensionality Decrease: AlexNet involves max-pooling layers for downsampling, lessening the spatial components of the element maps. This aids in diminishing the computational weight and controlling overfitting. 9. Model Size and Complexity: While AlexNet was viewed as profound at that point, it is somewhat more modest and less complicated contrasted with later designs. This straightforwardness made it more obvious and carry out. 10. Utilization of Assistant Classifiers: To resolve the issue of evaporating angles during preparation, AlexNet presented the idea of helper classifiers. These extra classifiers were joined to moderate layers and gave angle signs to before layers during backpropagation. 11. Impact on Research Direction: The outcome of AlexNet denoted a huge change in the field of PC vision. It incited scientists to investigate the capability of profound learning for different picture-related assignments, prompting the fast improvement of further developed CNN designs. What is GoogleNet?GoogleNet, otherwise called Inception v1, is a CNN architecture created by the Google Brain group, especially by Christian Szegedy, Wei Liu, and others. It was introduced in 2014 and won the ILSVRC with further developed precision and computational productivity. GoogleNet's architecture is described by its deep design, which comprises 22 layers, making it one of the first "exceptionally deep" CNNs. 1. Architecture GoogleNet (Inception v1): Presented in 2014, GoogleNet is essential for the Inception group of CNNs. It is known for its deep design involving 22 layers (inception modules). The vital development of GoogleNet is the inception module, which considers equal convolutions of various channel sizes inside a similar layer. This decreased computational intricacy while keeping up with precision, making GoogleNet more effective than AlexNet. 2. Network Profundity: GoogleNet's inception modules are considered an essentially deeper design without expanding computational expenses. With 22 layers, GoogleNet was one of the main CNNs to show the benefits of expanded network profundity, prompting further developed exactness and power. 3. Computational Productivity: The inception modules in GoogleNet are considered a more productive usage of computational assets. By utilizing equal convolutions inside every inception block, GoogleNet diminished the number of boundaries and calculations, making it more attainable for continuous applications and conveying on asset-compelled gadgets. 4. Overfitting: The deep however effective design of GoogleNet essentially diminished overfitting, permitting it to perform better on more modest datasets and move learning situations.  5. Training: The training of GoogleNet additionally elaborates on utilizing the ImageNet dataset, and comparable information increase procedures were utilized to upgrade generalization. Be that as it may, because of its deeper architecture, GoogleNet required more computational assets than AlexNet during training. The development of inception modules permitted GoogleNet to find some kind of harmony between profundity and computational effectiveness. The equal convolutions inside every inception block decreased the number of calculations and boundaries altogether, making training more achievable and effective. 6. Results: GoogleNet accomplished a great top-5 blunder pace of around 6.67% in the ImageNet 2014 contest, outperforming AlexNet's presentation. The deep however proficient architecture of GoogleNet exhibited the capability of deeper neural networks while keeping up with computational achievability, making it more engaging for true applications. 7. Convolutional Layer Setup: GoogleNet presented the idea of beginning modules, which comprise numerous equal convolutional layers of various channel sizes. This plan permits GoogleNet to catch highlights at different scales and altogether works on the organization's capacity to remove significant elements from different degrees of deliberation. 8. Dimensionality Decrease: notwithstanding customary max-pooling, GoogleNet utilizes dimensionality decrease methods like 1x1 convolutions. These more modest convolutions are computationally less escalated and assist with diminishing the number of elements while safeguarding fundamental data. 9. Model Size and Complexity: GoogleNet's origin modules bring about a more profound design with fundamentally more layers and boundaries. This intricacy, while offering further developed precision, can likewise make the organization more testing to prepare and calibrate. 10. Utilization of Assistant Classifiers: GoogleNet refined the idea of assistant classifiers by incorporating them inside the initiation modules. These assistant classifiers advance the preparation of more profound layers and upgrade the angle stream, adding to more steady and effective preparation. 11. Impact on Research Direction: GoogleNet's beginning modules presented the possibility of effective component extraction at various scales. This idea impacted the plan of resulting designs, empowering analysts to zero in on advancing organization profundity and computational productivity while keeping up with or further developing precision. ConclusionBoth AlexNet and GoogleNet lastingly affect the field of computer vision and deep learning. AlexNet exhibited the capability of CNNs for image recognition tasks and set up for future progressions. Then again, GoogleNet presented the idea of origin modules, making them ready for more effective and deeper CNN structures. While AlexNet and GoogleNet have their special assets, the field of deep learning has developed fundamentally since their presentations. Present-day designs, like ResNet, DenseNet, and EfficientNet, have additionally pushed the limits of exactness, productivity, and generalization. As analysts proceed to improve and expand on these essential models, the fate of computer vision holds considerably more noteworthy commitment and additional intriguing prospects. Next TopicHow to Use LightGBM in Python |