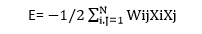

Hopfield Network

A Hopfield network is a special kind of neural network whose response is computed differently from that of most other neural networks: the output is obtained by an iterative process that converges to a stable state. It has a single layer of neurons whose size matches the input and the output, which must therefore have the same size. To make such a network recognize digits, for example, we present it with a set of correctly rendered digits. Afterwards, the network can transform a noisy input into the corresponding clean output.

In 1982, John Hopfield introduced this artificial neural network to store and retrieve memories in a way loosely modeled on the human brain. Each neuron is either on or off. The state of a neuron (on, +1, or off, -1 in the convention used here) is updated according to the input it receives from the other neurons. A Hopfield network is first trained to store a number of patterns or memories. Afterwards, it can recognize any of the learned patterns when given partial or even corrupted data about that pattern, i.e., it eventually settles down and returns the closest stored pattern. Thus, similar to the human brain, the Hopfield model is stable in pattern recognition.

A Hopfield network is a single-layer recurrent network in which the neurons are fully connected, i.e., each neuron is connected to every other neuron. For any two neurons $i$ and $j$ there is a connection weight $w_{ij}$ between them, which is symmetric, $w_{ij} = w_{ji}$, and there is no self-connection, $w_{ii} = 0$. For example, three neurons $i = 1, 2, 3$ with values $x_i = \pm 1$ have connection weights $w_{ij}$.

Updating rule:

Consider $N$ neurons $i = 1, \dots, N$ with values $x_i = \pm 1$. The update rule applied to node $i$ is:

If $h_i \ge 0$ then $x_i \to 1$, otherwise $x_i \to -1$,

where $h_i = \sum_{j} w_{ij} x_j + b_i$ and $b_i$ is a bias term. Thus $x_i \to \operatorname{sgn}(h_i)$, where $\operatorname{sgn}(r) = 1$ if $r \ge 0$ and $\operatorname{sgn}(r) = -1$ if $r < 0$. We set $b_i = 0$, since it makes no difference when training the network with random patterns.
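The single-node update rule can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names `local_field`, `sgn`, and `update_node`, as well as the 3-neuron weight matrix, are made up for the example. The field is assumed to be the weighted sum of the neuron states plus an optional bias, and the convention `sgn(0) = 1` follows the text.

```python
def local_field(w, x, i, b=None):
    """Input h_i to neuron i: sum_j w[i][j] * x[j], plus b[i] if a bias is given."""
    h = sum(w[i][j] * x[j] for j in range(len(x)))
    if b is not None:
        h += b[i]
    return h


def sgn(r):
    """Sign convention from the text: 1 if r >= 0, otherwise -1."""
    return 1 if r >= 0 else -1


def update_node(w, x, i, b=None):
    """Return a copy of state x in which only neuron i has been updated."""
    x_new = list(x)
    x_new[i] = sgn(local_field(w, x, i, b))
    return x_new


# Hypothetical weights for three neurons: symmetric, zero diagonal.
w = [
    [0, 1, -1],
    [1, 0, 1],
    [-1, 1, 0],
]
x = [1, -1, 1]

print(local_field(w, x, 0))  # -2
print(update_node(w, x, 0))  # [-1, -1, 1]
```

Returning a new list instead of modifying `x` in place keeps the old state available, which makes it easy to compare the state before and after an update.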
With $b_i = 0$, we therefore consider $h_i = \sum_{j} w_{ij} x_j$.

There are two different approaches to updating the nodes:

Synchronously: all nodes are updated simultaneously at each time step.

Asynchronously: at each time step, one node is updated, chosen randomly or according to some rule. Asynchronous updating is more biologically realistic.

Hopfield Network as a Dynamical system:

Consider $X = \{-1, 1\}^N$, so that each state $x \in X$ is given by $x_i \in \{-1, 1\}$ for $1 \le i \le N$. This gives $2^N$ possible states or configurations of the network. We can define a metric on $X$ using the Hamming distance between any two states:

$$\rho(x, y) = \#\{i : x_i \ne y_i\}$$

Here, $\rho$ is a metric with $0 \le \rho(x, y) \le N$. It is clearly symmetric and reflexive. With either asynchronous or synchronous updating, we get a discrete-time dynamical system. The updating rule defines a map $\mathrm{Up}: X \to X$, and $\mathrm{Up}$ is trivially continuous because $X$ is a finite, discrete space.

Example:

Suppose we have only two neurons, $N = 2$. There are two non-trivial choices for the connectivities: in the first case $w_{12} = w_{21} = 1$, and in the second case $w_{12} = w_{21} = -1$.

Asynchronous updating: In the first case, there are two attracting fixed points, $[1, 1]$ and $[-1, -1]$, and every orbit converges to one of these. In the second case, the fixed points are $[-1, 1]$ and $[1, -1]$, and every orbit converges to one of these. For any fixed point, swapping all the signs gives another fixed point.

Synchronous updating: In both cases there are fixed points, but none of them attracts nearby points, i.e., they are not attracting fixed points. Some orbits oscillate forever.

Energy function evaluation:

Hopfield networks have an energy function that decreases or stays unchanged under asynchronous updating. For a given state $x \in \{-1, 1\}^N$ of the network and for any set of connection weights $w_{ij}$ with $w_{ij} = w_{ji}$ and $w_{ii} = 0$, let

$$E = -\frac{1}{2} \sum_{i \ne j} w_{ij} x_i x_j .$$

Suppose we update $x_m$ to $x'_m$ and denote the new energy by $E'$. We show that

$$E' - E = (x_m - x'_m) \sum_{i \ne m} w_{mi} x_i .$$
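One way to see where this identity comes from is sketched here, assuming the quadratic energy $E = -\frac{1}{2} \sum_{i \ne j} w_{ij} x_i x_j$ with symmetric weights and zero diagonal. Split the sum into the terms that contain $x_m$ and a remainder $R$ that does not depend on $x_m$ (the symbol $R$ is introduced only for this sketch). By symmetry, the terms with $i = m$ and those with $j = m$ contribute equally, which cancels the factor $\frac{1}{2}$:

$$
\begin{aligned}
E &= -x_m \sum_{i \ne m} w_{mi} x_i + R, \\
E' &= -x'_m \sum_{i \ne m} w_{mi} x_i + R, \\
E' - E &= (x_m - x'_m) \sum_{i \ne m} w_{mi} x_i .
\end{aligned}
$$

Only $x_m$ changes in the update, so $R$ and the sum over $i \ne m$ are the same in $E$ and $E'$.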
Using this expression for $E' - E$: if $x_m = x'_m$, then $E' = E$. If $x_m = -1$ and $x'_m = 1$, then $x_m - x'_m = -2$ and $h_m = \sum_i w_{mi} x_i \ge 0$, so $E' - E \le 0$. Similarly, if $x_m = 1$ and $x'_m = -1$, then $x_m - x'_m = 2$ and $h_m = \sum_i w_{mi} x_i < 0$, so $E' - E < 0$.
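The two-neuron example and the energy argument can both be checked by brute force. The sketch below is illustrative only: all function names are made up for the example, the energy is assumed to be $E = -\frac{1}{2} \sum_{i \ne j} w_{ij} x_i x_j$, and the random weights are small integers chosen for convenience. It lists the fixed points for both weight choices, shows a synchronous orbit that oscillates, and records the energy along a random asynchronous orbit.

```python
import itertools
import random


def sgn(r):
    """Sign convention from the text: 1 if r >= 0, otherwise -1."""
    return 1 if r >= 0 else -1


def field(w, x, i):
    """Input h_i to neuron i with zero bias."""
    return sum(w[i][j] * x[j] for j in range(len(x)))


def energy(w, x):
    """Assumed energy: E = -1/2 * sum over i != j of w[i][j] * x[i] * x[j]."""
    n = len(x)
    return -0.5 * sum(
        w[i][j] * x[i] * x[j] for i in range(n) for j in range(n) if i != j
    )


def step_async(w, x, i):
    """Update only neuron i."""
    y = list(x)
    y[i] = sgn(field(w, x, i))
    return tuple(y)


def step_sync(w, x):
    """Update every neuron from the same old state."""
    return tuple(sgn(field(w, x, i)) for i in range(len(x)))


def fixed_points(w):
    """States that no single-node update changes (same test as one sync step)."""
    states = itertools.product((-1, 1), repeat=len(w))
    return [x for x in states if step_sync(w, x) == x]


def random_symmetric_weights(n, rng):
    """Random integer weights with w[i][j] == w[j][i] and zero diagonal."""
    w = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            w[i][j] = w[j][i] = rng.randint(-3, 3)
    return w


def energy_trace(w, x, steps, rng):
    """Energies along an asynchronous orbit with randomly chosen nodes."""
    trace = [energy(w, x)]
    for _ in range(steps):
        x = step_async(w, x, rng.randrange(len(x)))
        trace.append(energy(w, x))
    return trace


w_pos = [[0, 1], [1, 0]]    # w12 = w21 = 1
w_neg = [[0, -1], [-1, 0]]  # w12 = w21 = -1

print(fixed_points(w_pos))         # [(-1, -1), (1, 1)]
print(fixed_points(w_neg))         # [(-1, 1), (1, -1)]
print(step_sync(w_pos, (1, -1)))   # (-1, 1)
print(step_sync(w_pos, (-1, 1)))   # (1, -1), so this orbit has period 2

rng = random.Random(0)
w = random_symmetric_weights(6, rng)
start = tuple(rng.choice((-1, 1)) for _ in range(6))
trace = energy_trace(w, start, 200, rng)
print(all(b <= a for a, b in zip(trace, trace[1:])))  # True
```

Integer weights keep the energies exactly representable as floats, so the comparison `b <= a` needs no tolerance. With real-valued weights a small tolerance would be safer.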