AI Tech Stack

Artificial Intelligence (AI) has revolutionised problem-solving and automation. Successful AI integration depends on an effective AI technology stack: a set of interlinked technologies and tools that work together to harness the capabilities of AI. This article examines AI technology stacks, covering their constituent elements, their importance, and the challenges they involve. In a fast-changing technology landscape, the integration of AI into software engineering is reshaping how software is designed and deployed.

Introduction to AI Tech Stack

In artificial intelligence, the AI technology stack, sometimes referred to as the AI software stack, is the foundational infrastructure required to develop and deploy AI. This collection of tools serves data scientists, engineers, and developers, offering the elements needed to create, develop, and operationalise AI models. The stack is typically described in terms of the following layers:

- Data: This layer covers data collection, cleaning, preprocessing, and storage. It is the foundation of the AI stack, because AI systems need substantial amounts of data to learn from and make decisions.

- Algorithms: At this layer, mathematical models and algorithms are developed to help AI systems extract patterns and insights from data. It encompasses machine learning and other statistical models.

- Infrastructure: This layer includes the hardware and software components that support the operation of AI systems, such as specialised hardware and virtualisation and containerisation tools.

- Platforms: This layer provides the frameworks and tools needed to create and deploy AI applications. It includes programming languages as well as libraries and frameworks such as scikit-learn, PyTorch, and TensorFlow.

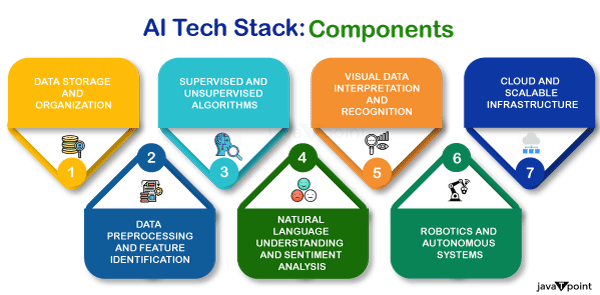

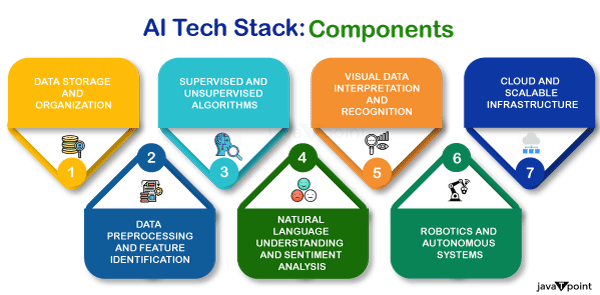

The Components of an AI Tech Stack

- Data Storage and Organization

Before any AI processing occurs, data must be stored securely and efficiently. SQL databases are the usual choice for structured data, and NoSQL databases for unstructured data. For large-scale data, big data technologies such as Hadoop's HDFS for distributed storage and Spark's in-memory processing become necessary. The type of storage selected directly affects data retrieval speed, which is crucial for real-time analytics and machine learning pipelines.

- Data Preprocessing and Feature Identification: The Bridge to Machine Learning

Storage is followed by data preprocessing and feature identification. Preprocessing involves normalization, handling missing values, and outlier detection; these steps are performed using libraries such as Scikit-learn and Pandas in Python. Feature identification is pivotal for dimensionality reduction and is carried out using techniques like Principal Component Analysis (PCA) or feature importance ranking. The cleaned and reduced features become the input for machine learning algorithms, which can improve both accuracy and efficiency.

- Supervised and Unsupervised Algorithms: The Core of Data Modeling

Once preprocessed data is available, machine learning algorithms are applied. Algorithms like Support Vector Machines (SVMs) for classification, Random Forest for ensemble learning, and k-means for clustering serve specific roles in data modeling. The choice of algorithm directly affects computational efficiency and predictive accuracy, and must therefore match the problem's requirements.

- Natural Language Understanding and Sentiment Analysis: Deciphering Human Context

When it comes to interpreting human language, Natural Language Processing (NLP) libraries like NLTK and spaCy serve as the foundational layer. For more advanced applications like sentiment analysis, transformer-based models like GPT-4 or BERT offer higher levels of understanding and context recognition. These NLP tools and models are usually integrated into the AI stack following deep learning components for applications requiring natural language interaction.

- Visual Data Interpretation and Recognition: Making Sense of the World

For visual data, computer vision technologies such as OpenCV are indispensable. Advanced applications may use convolutional neural networks (CNNs) for facial recognition, object detection, and more. These computer vision components often work in tandem with machine learning algorithms to enable multi-modal data interpretation.

- Robotics and Autonomous Systems: Real-world Applications

For physical applications like robotics and autonomous systems, sensor fusion techniques are integrated into the stack. Algorithms for Simultaneous Localization and Mapping (SLAM) and decision-making algorithms like Monte Carlo Tree Search (MCTS) are implemented. These elements function alongside the machine learning and computer vision components, driving the AI's capability to interact with its environment.

- Cloud and Scalable Infrastructure: The Bedrock of AI Systems

The entire AI tech stack often operates within a cloud-based infrastructure like AWS, Google Cloud, or Azure. These platforms provide scalable, on-demand computational resources that are vital for data storage, processing speed, and algorithmic execution. The cloud infrastructure acts as the enabling layer that allows for the seamless and integrated operation of all the above components.
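
The preprocessing component in the list above (handling missing values, outlier detection, normalization) can be illustrated with a small sketch. The article names Scikit-learn and Pandas for these steps; the version below deliberately uses only Python's standard library so that it runs without extra dependencies. The sample readings, the function names, the median fill, the 1.5 × IQR outlier rule, and min-max scaling are all illustrative assumptions, not choices prescribed by the article.

```python
import statistics

# Hypothetical sensor readings; None marks a missing value.
readings = [10.0, 12.0, None, 11.0, 13.0, 95.0, 12.0, 11.0]


def impute_median(values):
    """Fill missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outlier_flags(values, k=1.5):
    """Mark values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v < low or v > high for v in values]


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Run the three steps in order: impute, drop flagged outliers, normalize.
imputed = impute_median(readings)
flags = iqr_outlier_flags(imputed)
kept = [v for v, is_outlier in zip(imputed, flags) if not is_outlier]
scaled = min_max_scale(kept)

print("imputed:", imputed)
print("outlier flags:", flags)
print("scaled:", [round(v, 3) for v in scaled])
```

In a real pipeline these steps would more likely be expressed with library transformers, and the fill value and scaling range would be computed on the training data only and then reused on new data.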

Conclusion

AI Tech Stacks are the backbone of modern AI development. They facilitate the creation of intelligent solutions by providing a structured approach to data collection, processing, and model deployment. To stay competitive, organisations must invest in building robust AI Tech Stacks that can adapt to changing needs and technologies.
